
Node.js and Express

In this part, our focus shifts towards the backend - the server side of the stack.

We will be building our backend on top of NodeJS, which is a JavaScript runtime based on Google's Chrome V8 JavaScript engine.

This course material was thoroughly tested with version 18.3.2 of Node.js, but most of it has been switched to use 22.21.1. If you are using NVM, you can always switch, but you can also just stick with 22.21 for now.

FYI: you can check the version by running node -v in the command line. You can also list your installed versions with nvm list, install a new version of Node with nvm install, and switch versions with nvm use.

As mentioned in part 1, browsers don't yet support the newest features of JavaScript, and that is why the code running in the browser must be transpiled with e.g. babel. The situation with JavaScript running in the backend is different. The newest version of Node supports a large majority of the latest features of JavaScript, so we can use the latest features without having to transpile our code.

Our goal is to implement a backend that will work with the tasks application from part 2.

Setup for part 3

Before we get started with writing code, we need to make a few adjustments to our reading docs.

What I'd like you to do is to open your previous repo that has the reading folder in it in WebStorm. Once that project is open, create a new folder at the same level as your reading folder. Name this new folder backend-reading.

We are also going to duplicate a file watcher since we will mostly be working in JavaScript.

Please go to WebStorm's Settings (Ctrl-Alt-S).

Navigate to Tools->File Watchers.

Highlight the existing COMP 227 Git Watcher and click the copy button in the small toolbar up top.

Clicking that button will launch the New File Watcher window.

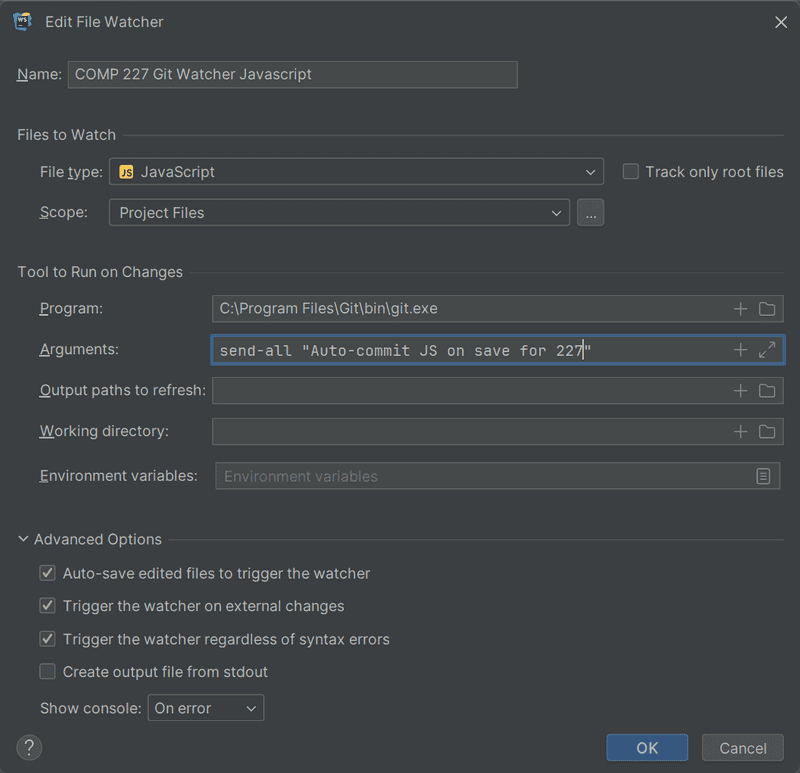

Change the name to COMP 227 Git Watcher Javascript

Select the File type as JavaScript

and change the argument to say: send-all "Auto-commit JS on save for 227"

It should look like this:

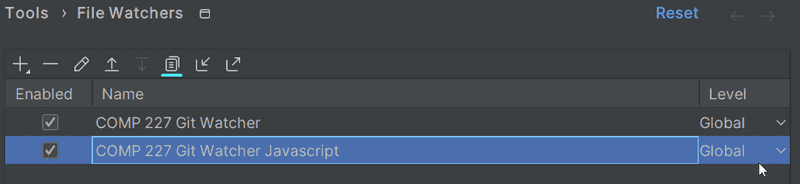

Click OK, and ensure that both rules are selected and have the Global level.

Then click OK again to close the settings.

However, let's start with the basics by implementing a classic "hello world" application.

Notice that the applications and exercises in this part are not all React applications, and we will not use the npm create vite@latest -- --template react utility for initializing the project for this application.

We had already mentioned npm back in part 2, which is a tool used for managing JavaScript packages. In fact, npm originates from the Node ecosystem.

Let's navigate to the backend-reading directory we created above using Terminal, and create a new template for our application using a different initialization technique. Assuming you are at the root of your repository:

cd backend-reading

npm init

We will answer the questions presented by the npm init utility by pressing Enter to accept most of the defaults, except Author.

The result will be an automatically generated package.json file at the root of the project that contains information about the project.

{

"name": "backend-reading",

"version": "1.0.0",

"description": "",

"main": "index.js",

"scripts": {

"test": "echo \"Error: no test specified\" && exit 1"

},

"author": "Osvaldo Jiménez",

"license": "ISC"

}

The file defines, for instance, that the entry point of the application is the index.js file.

Let's make a small change to the scripts object by adding a new script command:

{

// ...

"scripts": {

"start": "node index.js",

"test": "echo \"Error: no test specified\" && exit 1"

},

// ...

}

Next, let's create the first version of our application by adding an index.js file to the root of the backend-reading folder with the following code:

console.log("hello comp227");

At this point, verify that your file watcher has committed the files, which can be done by typing git status in the repo.

Please also git push your code here.

Do not continue until your file watcher is working.

We can run the program directly with Node from the command line:

node index.js

Or we can run it as an npm script:

npm start

The start npm script works because we defined it in the package.json file:

{

// ...

"scripts": {

"start": "node index.js",

"test": "echo \"Error: no test specified\" && exit 1"

},

// ...

}

Even though the project works when it is started by calling node index.js from the command line, it's customary for npm projects to execute such tasks as npm scripts.

By default, the package.json file also defines another commonly used npm script called npm test.

Since our project does not yet have a testing library, the npm test command simply executes the following command:

echo "Error: no test specified" && exit 1

Simple web server

Let's change the application into a web server by editing the index.js file as follows:

const http = require("http");

const app = http.createServer((request, response) => {

response.writeHead(200, { "Content-Type": "text/plain" });

response.end("Hello COMP227!");

});

const PORT = 3001;

app.listen(PORT);

console.log(`Server running on port ${PORT}`);

Once you start the application again, the following message is printed in the console:

Server running on port 3001

Warning: if port 3001 is already in use by some other application, then starting the server will result in the following error message:

➜ hello npm start

> [email protected] start /Users/powercat/comp227/part3/hello

> node index.js

Server running on port 3001

events.js:167

throw er; // Unhandled 'error' event

^

Error: listen EADDRINUSE :::3001

at Server.setupListenHandle [as _listen2] (net.js:1330:14)

at listenInCluster (net.js:1378:12)

You have two options. Either shut down the application using port 3001 (json-server in the last part of the material was using port 3001), or use a different port for this application.

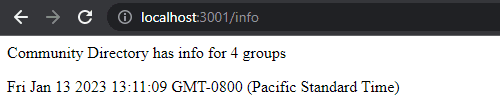

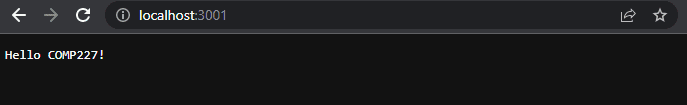

We can open our humble application in the browser by visiting the address http://localhost:3001:

The text is rendered in black and white because my system is in dark mode. The server works the same way regardless of the path in the URL, so the address http://localhost:3001/foo/bar will display the same content.

Let's take a closer look at the first line of the code:

const http = require("http");

In the first row, the application imports Node's built-in web server module. This is practically what we have already been doing in our browser-side code, but with a slightly different syntax:

import http from "http";

These days, code that runs in the browser uses ES6 modules. Modules are defined with an export and used by other files with an import.

Node.js uses CommonJS modules. The reason for this is that the Node ecosystem needed modules long before JavaScript supported them in the language specification. Currently, Node also supports the use of ES6 modules, but we'll stick to the CommonJS modules.

CommonJS modules function almost exactly like ES6 modules, at least as far as our needs in this course are concerned.

The next chunk in our code looks like this:

const app = http.createServer((request, response) => {

response.writeHead(200, { "Content-Type": "text/plain" });

response.end("Hello COMP227!");

});

The code uses the createServer method of the http module to create a new web server.

An event handler is registered to the server that is called every time an HTTP request is made to the server's address http://localhost:3001.

The request is responded to with the status code 200,

with the Content-Type header set to text/plain, and the content of the site to be returned set to Hello COMP227.

The last rows bind the http server assigned to the app variable, to listen to HTTP requests sent to port 3001:

const PORT = 3001;

app.listen(PORT);

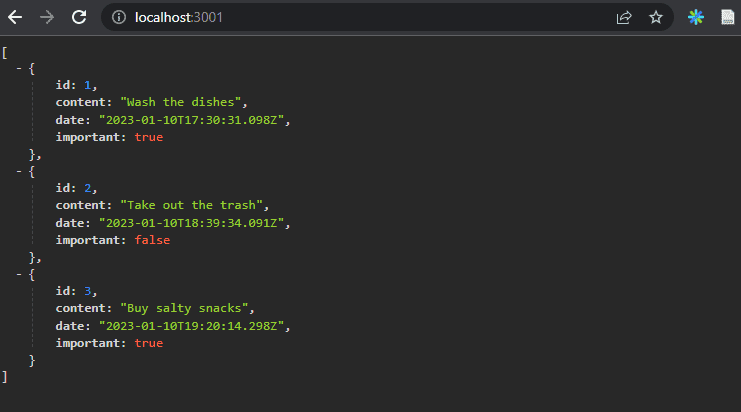

console.log(`Server running on port ${PORT}`);

The primary purpose of the backend server in this course is to offer raw data in JSON format to the frontend. For this reason, let's immediately change our server to return a hardcoded list of tasks in the JSON format:

const http = require("http");

let tasks = [

{ id: 1, content: "Wash the dishes", date: "2023-01-10T17:30:31.098Z", important: true },

{ id: 2, content: "Take out the trash", date: "2023-01-10T18:39:34.091Z", important: false },

{ id: 3, content: "Buy salty snacks", date: "2023-01-10T19:20:14.298Z", important: true }

];

const app = http.createServer((request, response) => {

response.writeHead(200, { "Content-Type": "application/json" });

response.end(JSON.stringify(tasks));

});

const PORT = 3001;

app.listen(PORT);

console.log(`Server running on port ${PORT}`);

Let's restart the server (you can shut the server down by pressing Ctrl+C in the console) and refresh the browser.

The application/json value in the Content-Type header informs the receiver that the data is in the JSON format.

The tasks array gets transformed into JSON with the JSON.stringify(tasks) method.

This is necessary because the response.end() method expects a string or a buffer to send as the response body.

When we open the browser, the displayed format is exactly the same as in part 2 where we used json-server to serve the list of tasks:

Express

Implementing our server code directly with Node's built-in http web server is possible. However, it is cumbersome, especially once the application grows in size.

Many libraries have been developed to ease server-side development with Node, by offering a more pleasing interface to work with the built-in http module. These libraries aim to provide a better abstraction for general use cases we usually require to build a backend server. By far the most popular library intended for this purpose is Express.

Let's take Express into use by defining it as a project dependency with the command:

npm i express

The dependency is also added to our package.json file:

{

// ...

"dependencies": {

"express": "^5.2.1"

}

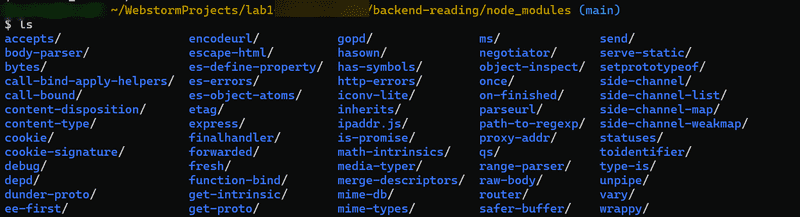

}

The source code for the dependency is installed in the node_modules directory located at the root of the project. In addition to Express, you can find a great number of other dependencies in the directory:

These are the dependencies of the Express library and the dependencies of all of its dependencies, and so forth. These are called the transitive dependencies of our project.

Version 5.2.1 of Express was installed in our project. What does the caret in front of the version number in package.json mean?

"express": "^5.2.1"

The versioning model used in npm is called semantic versioning.

The caret in the front of ^5.X.Y means that if and when the dependencies of a project are updated,

the version of Express that is installed will be at least 5.X.Y.

However, the installed version of Express can also have a larger patch number (the last number),

or a larger minor number (the middle number).

The major version of the library indicated by the first major number must be the same.
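The rule can be sketched as a small function. This is a simplified illustration only: it assumes plain MAJOR.MINOR.PATCH strings with a major version of at least 1, and the names parseVersion and satisfiesCaret are invented here. The real semantic versioning rules that npm applies also cover 0.x versions and pre-release tags.

```javascript
// Split "5.2.1" into the numbers [5, 2, 1].
const parseVersion = (version) => version.split(".").map(Number);

// Does `installed` fall inside the caret range ^base?
const satisfiesCaret = (installed, base) => {
  const [major, minor, patch] = parseVersion(installed);
  const [baseMajor, baseMinor, basePatch] = parseVersion(base);

  if (major !== baseMajor) return false;             // major must match exactly
  if (minor !== baseMinor) return minor > baseMinor; // a larger minor is fine
  return patch >= basePatch;                         // same minor: patch may grow
};

console.log(satisfiesCaret("5.2.1", "5.2.1"));    // true
console.log(satisfiesCaret("5.99.175", "5.2.1")); // true
console.log(satisfiesCaret("5.2.0", "5.2.1"));    // false
console.log(satisfiesCaret("6.0.0", "5.2.1"));    // false
```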

We can update the dependencies of the project with the command:

npm update

Likewise, if we start working on the project on another computer, we can install all up-to-date dependencies of the project defined in package.json by running this next command in the project's root directory:

npm i

If the major number of a dependency does not change, then the newer versions should be backwards compatible. This means that if our application happened to use version 5.99.175 of Express in the future, then all the code implemented in this part should still work without any changes. In contrast, the future 6.0.0 version of Express may contain changes that would cause our application to no longer work.

Web and Express

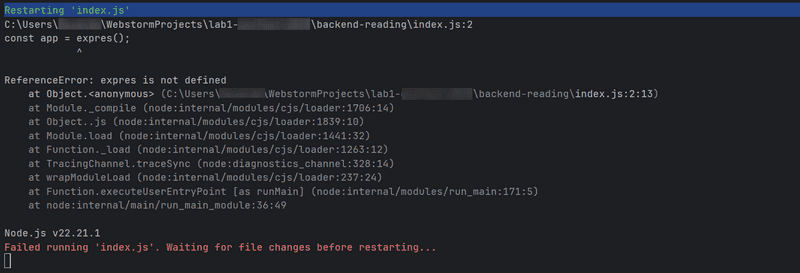

Let's get back to our application and make the following changes in index.js:

const express = require("express");

const app = express();

let tasks = [

...

];

app.get("/", (request, response) => {

response.send("<h1>Hello COMP227!</h1>");

});

app.get("/api/tasks", (request, response) => {

response.json(tasks);

});

const PORT = 3001;

app.listen(PORT, () => {

console.log(`Server running on port ${PORT}`);

});

To get the new version of our application into use, first we have to restart it.

The application did not change a whole lot.

Right at the beginning of our code, we're importing express,

which this time is a function that is used to create an Express application stored in the app variable:

const express = require("express");

const app = express();

Next, we define two routes to the application.

The first one defines an event handler that is used to handle HTTP GET requests made to the application's / root:

app.get("/", (request, response) => {

response.send("<h1>Hello COMP227!</h1>");

});

The event handler function accepts two parameters.

The request parameter contains all of the information of the HTTP request,

and the response parameter is used to define how the request is responded to.

In our code, the request is answered by using the send method of the response object.

Calling the method makes the server respond to the HTTP request by sending a response containing the string <h1>Hello COMP227!</h1> that was passed to the send method.

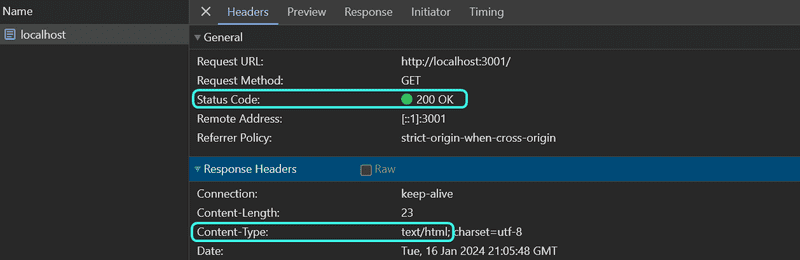

Since the parameter is a string, Express automatically sets the value of the Content-Type header to be text/html.

The status code of the response defaults to 200.

We can verify this from the Network tab in developer tools:

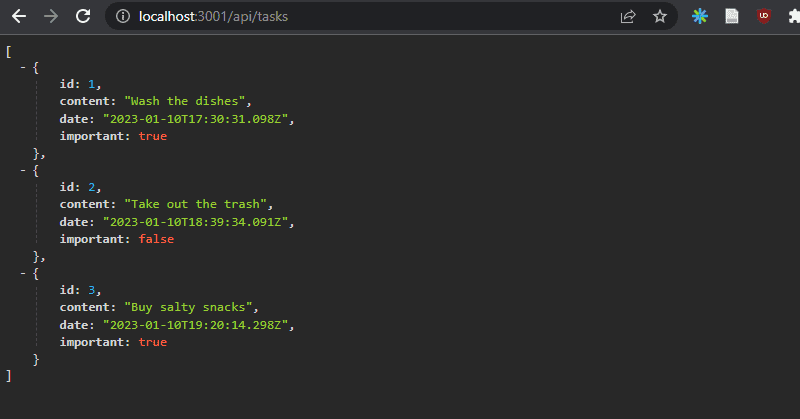

The second route defines an event handler that handles HTTP GET requests made to the tasks path of the application:

app.get("/api/tasks", (request, response) => {

response.json(tasks);

});

The request is responded to with the json method of the response object.

Calling the method will send the tasks array that was passed to it as a JSON formatted string.

Express automatically sets the Content-Type header with the appropriate value of application/json.

Next, let's take a quick look at the data sent in JSON format.

In the earlier version where we were only using Node, we had to transform the data into the JSON formatted string with the JSON.stringify method:

response.end(JSON.stringify(tasks));

With Express, this is no longer required, because this transformation happens automatically.

It's worth noting that JSON is a data format.

However, it's often represented as a string and is not the same as a JavaScript object, like the value assigned to tasks.

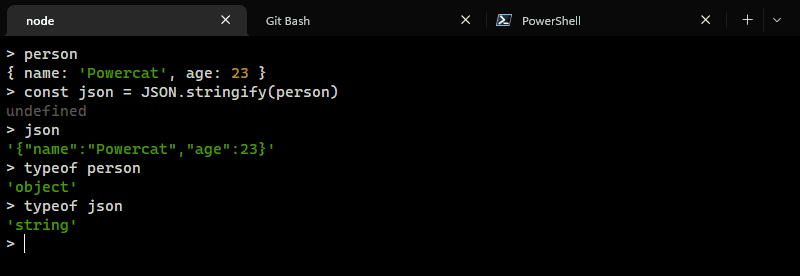

The experiment shown below illustrates this point:

The experiment above was done in the interactive node-repl.

You can start the interactive node-repl by typing in node in the command line.

The repl is particularly useful for testing how commands work while you're writing application code.

To get out of node-repl, type .exit.

I highly recommend this!
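A small experiment along these lines, which you could paste into the repl, might look like the sketch below. The shortened tasks array is only for illustration.

```javascript
const tasks = [
  { id: 1, content: "Wash the dishes", important: true },
  { id: 2, content: "Take out the trash", important: false },
];

// JSON.stringify produces a string in the JSON format.
const json = JSON.stringify(tasks);

console.log(typeof tasks); // "object"
console.log(typeof json);  // "string"

// The array holds task objects, but the string is just a sequence of characters.
console.log(tasks[0].content); // "Wash the dishes"
console.log(json[0]);          // "["

// JSON.parse turns the string back into a new JavaScript value.
const parsed = JSON.parse(json);
console.log(parsed[1].content); // "Take out the trash"
```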

Automatic Change Tracking

If we change the application's code, we first need to stop the application from the console (Ctrl+C) and then restart it for the changes to take effect. Restarting feels cumbersome compared to React's smooth workflow, where the browser automatically updates when the code changes.

You can make the server track our changes by starting it with the --watch option:

node --watch index.js

Now, changes to the application's code will cause the server to restart automatically. Notice that although the server restarts automatically, you still need to refresh the browser. Unlike with React, we do not have a hot reload functionality that updates the browser in this scenario (where we return JSON data).

FYI: We don't have, nor could we have such hot reload functionality. Feel free to think about why that is the case.

Let's define a custom npm script in the package.json to start the development server:

{

// ..

"scripts": {

"start": "node index.js",

"dev": "node --watch index.js",

"test": "echo \"Error: no test specified\" && exit 1"

},

// ..

}

We can now start the server in development mode with the command:

npm run dev

FYI: Unlike the start and test scripts, we have to add run to the command because dev is a custom script rather than one of npm's built-in ones.

REST

Let's expand our application so that it mimics json-server's RESTful HTTP API.

Representational State Transfer, aka REST, was introduced in 2000 in Roy Fielding's dissertation. REST is an architectural style meant for building scalable web applications.

We are not going to dig into Fielding's definition of REST or spend time pondering about what is and isn't RESTful. Instead, we take a more narrow view by only concerning ourselves with how RESTful APIs are typically understood in web applications. The original definition of REST is not even limited to web applications.

We mentioned in the previous part that singular things, like tasks in the case of our application, are called resources in RESTful thinking. Every resource has an associated URL which is the resource's unique address.

One convention for creating unique addresses is to combine the name of the resource type with the resource's unique identifier.

Let's assume that the root URL of our service is www.example.com/api.

If we define the resource type of task to be tasks, then a task resource with the identifier 10 has the unique address www.example.com/api/tasks/10.

The URL for the entire collection of all task resources is www.example.com/api/tasks.

We can execute different operations on resources. The operation to be executed is defined by the HTTP verb:

| URL | verb | functionality |

|---|---|---|

| tasks/10 | GET | fetches a single resource |

| tasks | GET | fetches all resources in the collection |

| tasks | POST | creates a new resource based on the request data |

| tasks/10 | DELETE | removes the identified resource |

| tasks/10 | PUT | replaces the entire identified resource with the request data |

| tasks/10 | PATCH | replaces a part of the identified resource with the request data |

This is how we have roughly defined a uniform interface, which means a consistent way of defining interfaces that makes it possible for systems to cooperate.
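To make the table concrete, here is a rough sketch that expresses it as a lookup. The key format and the names operations and describeOperation are invented for this illustration; a real server would attach handlers to routes instead of returning descriptions.

```javascript
// The rows of the table, keyed by verb and by whether the URL names
// one item (tasks/10) or the whole collection (tasks).
const operations = {
  "GET item": "fetches a single resource",
  "GET collection": "fetches all resources in the collection",
  "POST collection": "creates a new resource based on the request data",
  "DELETE item": "removes the identified resource",
  "PUT item": "replaces the entire identified resource with the request data",
  "PATCH item": "replaces a part of the identified resource with the request data",
};

const describeOperation = (verb, path) => {
  // "tasks/10" has two segments (an item), "tasks" has one (the collection).
  const segments = path.split("/").filter(Boolean);
  const kind = segments.length > 1 ? "item" : "collection";
  return operations[`${verb} ${kind}`] ?? "not defined in the table";
};

console.log(describeOperation("GET", "tasks/10")); // fetches a single resource
console.log(describeOperation("POST", "tasks"));
console.log(describeOperation("DELETE", "tasks")); // not defined in the table
```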

FYI: This way of interpreting REST falls under the second level of RESTful maturity in the Richardson Maturity Model. According to the definition provided by Roy Fielding, we have not defined a REST API. In fact, a large majority of the world's purported "REST" APIs do not meet Fielding's original criteria outlined in his dissertation.

In some places (e.g. Richardson, Ruby: RESTful Web Services) you will see our model for a straightforward CRUD API, being referred to as an example of resource-oriented architecture instead of REST.

Fetching a single resource

Let's expand our application so that it offers a REST interface for operating on individual tasks. First, let's create a route for fetching a single resource.

The unique address we will use for an individual task is of the form tasks/10, where the number at the end refers to the task's unique id number.

We can define parameters for routes in Express by using the : syntax:

app.get("/api/tasks/:id", (request, response) => {

const id = request.params.id;

const task = tasks.find(task => task.id === id);

response.json(task);

});

Now app.get("/api/tasks/:id", ...) will handle all HTTP GET requests that are of the form /api/tasks/SOMETHING, where SOMETHING is an arbitrary string.

The id parameter in the route of a request can be accessed through the request object:

const id = request.params.id;

JavaScript array's find is used to find the task with an id that matches the parameter.

The task is then returned to the sender of the request.
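To get a feel for what a route parameter is, here is a simplified stand-in for route matching. It is not how Express implements its router, and matchRoute is a name invented for this sketch; it only shows how a ":id" segment captures the text found at that position in the URL.

```javascript
const matchRoute = (pattern, path) => {
  const patternParts = pattern.split("/");
  const pathParts = path.split("/");
  if (patternParts.length !== pathParts.length) return null;

  const params = {};
  for (let i = 0; i < patternParts.length; i++) {
    if (patternParts[i].startsWith(":")) {
      // A ":name" segment captures whatever text is in that position.
      params[patternParts[i].slice(1)] = pathParts[i];
    } else if (patternParts[i] !== pathParts[i]) {
      return null; // a fixed segment did not match
    }
  }
  return params;
};

console.log(matchRoute("/api/tasks/:id", "/api/tasks/1"));   // { id: '1' }
console.log(matchRoute("/api/tasks/:id", "/api/tasks/abc")); // { id: 'abc' }
console.log(matchRoute("/api/tasks/:id", "/api/tasks"));     // null
```

Because the value is cut out of the URL, it is captured as text.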

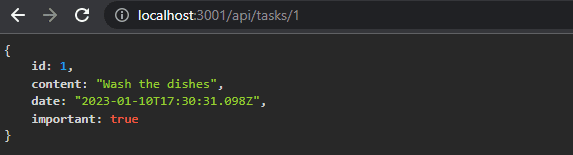

When we test our application by going to http://localhost:3001/api/tasks/1 in our browser, we notice that it does not appear to work, as the browser displays an empty page. This comes as no surprise to us as software developers, and it's time to debug.

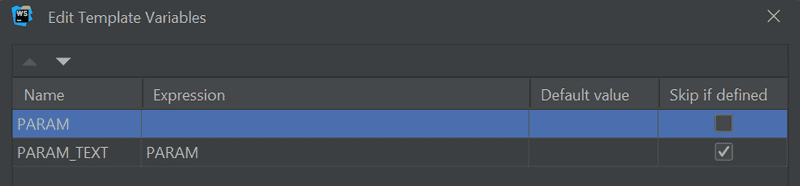

As I've been doing more with live templates, I decided to modify JetBrains log live template Settings->Editor->Live Templates->JavaScript->log.

Here's the template text that I used.

console.log('$PARAM_TEXT$ =', $PARAM$)$END$

Afterwards, I decided to edit the variables in the live template so they look like this:

Now we can add log commands to our code for id and task.

So type log, Enter, id, Enter(2x), and you get the console.log statement that shows below.

You could also add the line numbers and file names, but since I have clog already, I'll use that in those instances.

app.get("/api/tasks/:id", (request, response) => {

const id = request.params.id;

console.log("id =", id);

const task = tasks.find(task => task.id === id);

console.log("task =", task);

response.json(task);

});

When we visit http://localhost:3001/api/tasks/1 again in the browser, the server console (the terminal where you ran npm run dev) will display the following:

The id parameter from the route is passed to our application but the find method does not find a matching task.

To further our investigation, we also add a console.log inside the comparison function passed to the find method.

To do this, we have to expand tasks.find's compact arrow function syntax task => task.id === id to use an explicit return statement.

Also, while we are here, I used our clog and log live templates to generate all of the console.log statements.

Try using those shortcuts here!

Notice that even with our log live template, we can have some complex statements that appear twice, like task.id === id.

app.get("/api/tasks/:id", (request, response) => {

const id = request.params.id;

console.log("id(" + typeof id + ") =", id, " | index.js:36 - ");

const task = tasks.find(task => {

console.log("task.id(" + typeof task.id + ") =", task.id, " | index.js:38 - ");

console.log("task.id === id =", task.id === id);

return task.id === id;

});

console.log("task =", task);

response.json(task);

});

When we visit the URL again in the browser, each call to the comparison function prints a few different things to the console. Here is the first part of that console output:

id(string) = 1 | index.js:36 -

task.id(number) = 1 | index.js:38 -

task.id === id = false

task.id(number) = 2 | index.js:38 -

task.id === id = false

task.id(number) = 3 | index.js:38 -

task.id === id = false

task = undefined

The cause of the bug becomes clear.

The id variable contains a string "1", whereas the ids of tasks are integers.

In JavaScript, the "triple equals" comparison === considers all values of different types to not be equal by default, meaning that 1 is not "1".
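The comparison can be tried out in isolation. The variable names below are made up for this sketch.

```javascript
const idFromUrl = "1"; // route parameters arrive as strings
const idInData = 1;    // the hardcoded tasks use numeric ids

console.log(idInData === idFromUrl);         // false: number vs string
console.log(idInData === Number(idFromUrl)); // true: both are numbers now

// Number() yields NaN for text that is not numeric, and NaN is not equal
// to anything, so an id such as "abc" would simply match no task.
console.log(Number("abc"));                   // NaN
console.log(Number("abc") === Number("abc")); // false
```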

Let's fix the issue by changing the id parameter from a string into a number:

app.get("/api/tasks/:id", (request, response) => {

const id = Number(request.params.id);

const task = tasks.find(task => task.id === id);

response.json(task);

});

Now fetching an individual resource works.

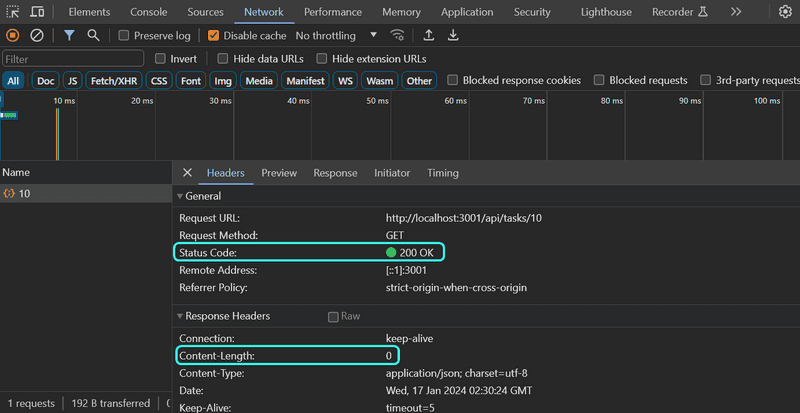

However, there's another problem with our application.

If we search for a task with an id that does not exist, the server responds with:

The HTTP status code that is returned is 200, which means that the response succeeded. 🐞

There is no data sent back with the response, since the value of the content-length header is 0, and the same can be verified from the browser. 🐞

The reason for this behavior is that the task variable is set to undefined if no matching task is found.

The situation needs to be handled on the server in a better way.

If no task is found, the server should respond with the status code 404 not found instead of 200.

Let's make the following change to our code:

app.get("/api/tasks/:id", (request, response) => {

const id = Number(request.params.id);

const task = tasks.find(task => task.id === id);

if (task) {

response.json(task);

} else {

response.status(404).end();

}

});

Since no data is attached to the response, we use the status method for setting the status and the end method for responding to the request without sending any data.

The if condition leverages the fact that all JavaScript objects are truthy,

meaning that they evaluate to true in a comparison operation.

However, undefined is falsy meaning that it will evaluate to false.
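The same branching can be tried without a server. In this sketch, findTaskResponse is a helper name made up for illustration; it returns the status code that the route would send.

```javascript
const tasks = [
  { id: 1, content: "Wash the dishes", important: true },
  { id: 2, content: "Take out the trash", important: false },
];

const findTaskResponse = (id) => {
  const task = tasks.find((t) => t.id === id); // undefined when nothing matches
  if (task) {
    return { status: 200, body: task };
  }
  return { status: 404, body: null };
};

console.log(findTaskResponse(1).status);  // 200
console.log(findTaskResponse(99).status); // 404

// Objects are truthy, undefined is falsy.
console.log(Boolean({}), Boolean(undefined)); // true false
```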

Our application works and sends the error status code if no task is found. However, the application doesn't return anything to show to the user, like web applications normally do when we visit a page that does not exist. We do not need to display anything in the browser because REST APIs are interfaces that are intended for programmatic use, and the error status code is all that is needed.

Anyway, it's possible to give a clue about the reason for sending a 404 error by overriding the default NOT FOUND message.

Deleting resources

Next, let's implement a route for deleting resources. Deletion happens by making an HTTP DELETE request to the URL of the resource:

app.delete("/api/tasks/:id", (request, response) => {

const id = Number(request.params.id);

tasks = tasks.filter(task => task.id !== id);

response.status(204).end();

});

If deleting the resource is successful, meaning that the task exists and is removed, we respond to the request with the status code 204 no content and return no data with the response.

There's no consensus on what status code should be returned to a DELETE request if the resource does not exist. The only two options are 204 and 404. For the sake of simplicity, our application will respond with 204 in both cases.
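The filter-based removal behaves the same whether or not the id exists, which is what makes answering 204 in both cases simple. Here is a minimal sketch with a shortened task list; removeTask is a helper name invented for this example.

```javascript
let tasks = [
  { id: 1, content: "Wash the dishes" },
  { id: 2, content: "Take out the trash" },
  { id: 3, content: "Buy salty snacks" },
];

// Keep every task except the one with the given id.
const removeTask = (id) => {
  tasks = tasks.filter((task) => task.id !== id);
};

removeTask(2);
console.log(tasks.map((t) => t.id)); // [ 1, 3 ]

removeTask(2);  // the task is already gone...
removeTask(42); // ...and this id never existed
console.log(tasks.map((t) => t.id)); // still [ 1, 3 ]
```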

Postman

So how do we test the delete operation? HTTP GET requests are easy to make from the browser. We could write some JavaScript for testing deletion, but writing test code is not always the best solution in every situation.

Many tools exist for making the testing of backends easier.

One of these is the command-line program curl.

However, instead of curl, we will take a look at using Postman for testing the application.

Postman is a popular tool for testing APIs, and I'm certain that some of you have heard of it already.

If you'd like, you can install Postman following the directions below, but it is not required.

You can install the Postman desktop client to try it out:

| Windows | Mac |

|---|---|

| winget install -e postman | brew install --cask postman |

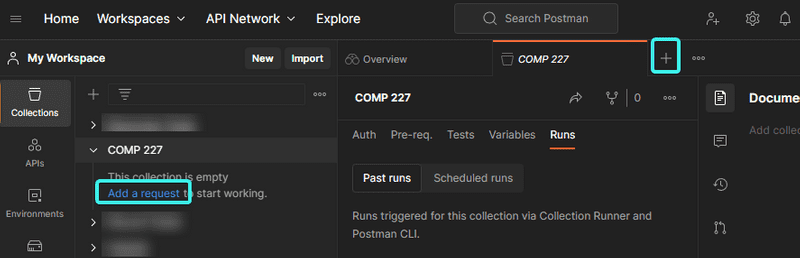

Create an account, then a personal workspace, and then create a collection. I named my collection COMP 227. You'll then add a request, which is a link provided on the side of Postman.

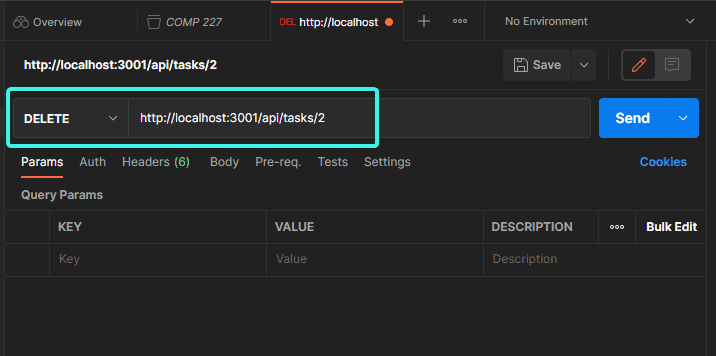

While there is a lot of lingo and terminology to sift through, once you get to the request page, it becomes much more manageable.

It's enough to define the URL and then select the correct request type (DELETE).

The backend server appears to respond correctly.

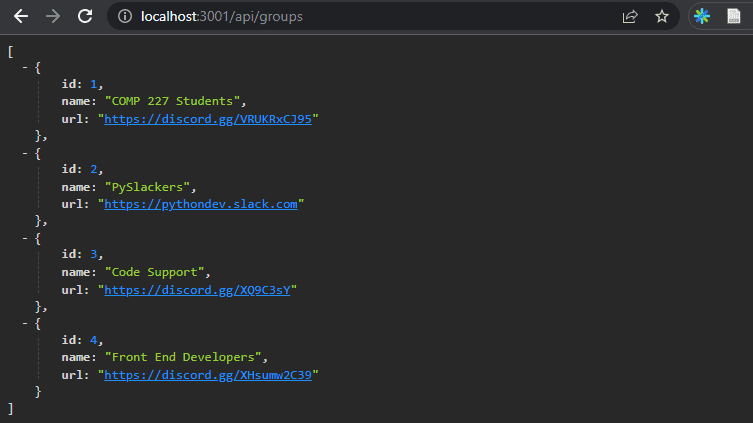

By making an HTTP GET request to, or just visiting http://localhost:3001/api/tasks,

we see that the task with the id 2 is no longer in the list, which indicates that the deletion was successful.

Currently, the tasks in the application are hard-coded and not yet saved in a database, so the list of tasks will reset to its original state when we restart the application.

WebStorm REST client

While Postman has become fairly popular due to all of its options, in our case we will just use WebStorm's REST client instead of Postman.

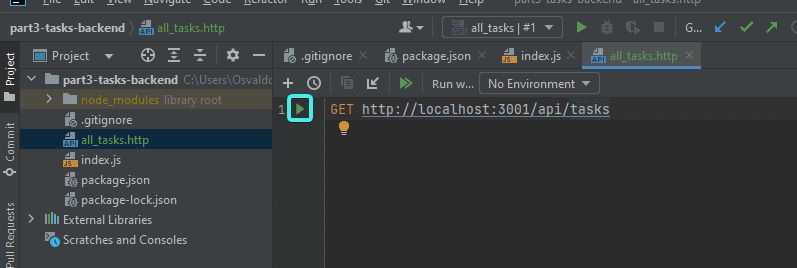

To use the REST client, right-click on the backend-reading folder and select New->HTTP Request.

Give it the name all_tasks and then you'll see a file named all_tasks.http.

We'll use that file to define a request that fetches all tasks.

FYI: Make sure to use http and not https for these examples.

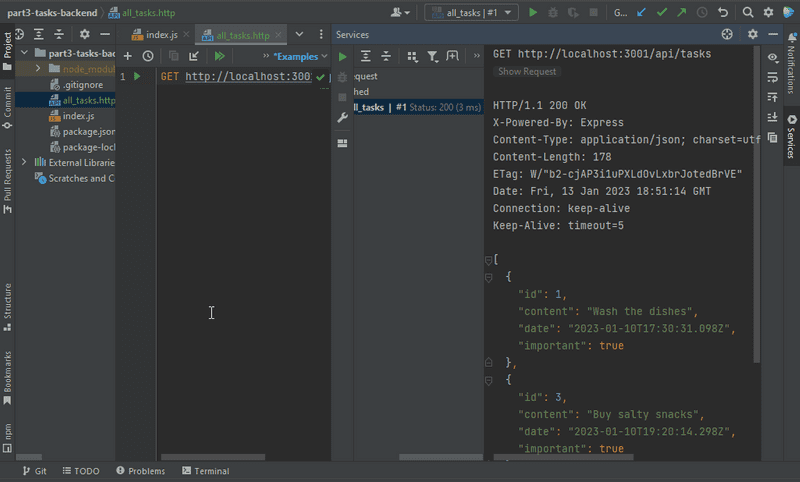

By clicking the highlighted play button, the REST client will execute the HTTP request and the response from the server is opened in the Services pane.

Receiving data

Next, let's make it possible to add new tasks to the server. Adding a task happens by making an HTTP POST request to the address http://localhost:3001/api/tasks, and by sending all the information for the new task in the request body in JSON format.

To access the data easily, we need the help of Express's JSON parser.

We can use the parser by adding the command app.use(express.json()).

Let's activate the JSON parser in index.js and implement an initial handler for dealing with the HTTP POST requests:

const express = require("express");

const app = express();

app.use(express.json());

//...

app.post("/api/tasks", (request, response) => {

const task = request.body;

console.log("task =", task);

response.json(task);

});

The event handler function can access the data from the body property of the request object.

Without the JSON parser, the body property would be undefined.

The JSON parser:

- takes the JSON data of a request,

- transforms it into a JavaScript object and

- attaches it to the body property of the request object

It does all of this before the route handler is called.
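The three steps can be sketched in plain JavaScript. This is a simplified stand-in and not Express's actual implementation: attachJsonBody and the request-like objects are invented for illustration, and what Express itself stores in body for a non-JSON request may differ by version.

```javascript
const attachJsonBody = (request, rawBody) => {
  const contentType = request.headers["content-type"] || "";
  if (contentType.startsWith("application/json")) {
    // 1. take the JSON text, 2. turn it into an object, 3. attach it as body
    request.body = JSON.parse(rawBody);
  }
  return request;
};

const raw = '{"content": "Learn Express", "important": true}';

const jsonRequest = attachJsonBody(
  { headers: { "content-type": "application/json" } },
  raw
);
console.log(jsonRequest.body.content); // "Learn Express"

// With a different Content-Type the text is never parsed in this sketch.
const textRequest = attachJsonBody(
  { headers: { "content-type": "text/plain" } },
  raw
);
console.log(textRequest.body); // undefined
```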

FYI: For the time being, the application does not do anything with the received data besides printing it to the console and sending it back in the response.

Before we implement the rest of the application logic, let's verify that the data is in fact received by the server. To do this, we'll need to be able to send data to the server. We'll show how to send POST requests in Postman and WebStorm.

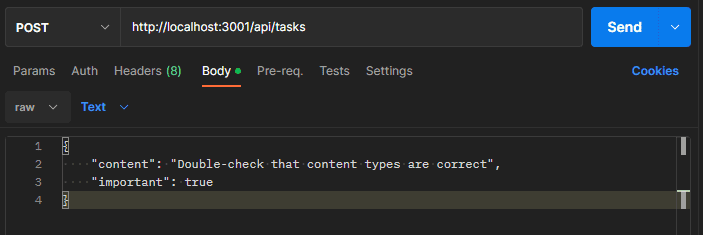

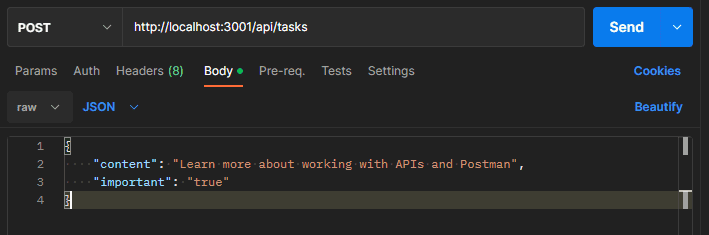

In Postman, the picture below shows how we also have to define the data sent in the body:

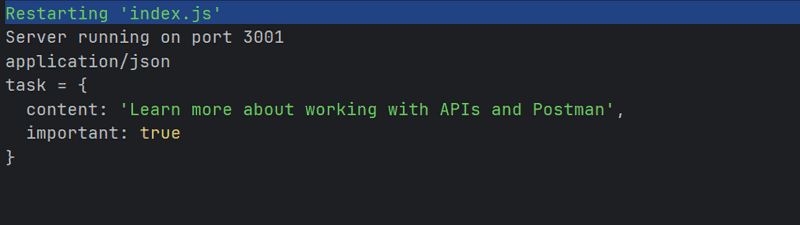

You'll need to restart the backend for the Express changes to take effect. Once the backend restarts and you send the POST request, the application prints the data that we sent in the request to the console:

Remember: When programming the backend, keep the console running the application visible at all times. The development server will restart if changes are made to the code, so by monitoring the console, you will immediately notice if there is an error in the application's code:

Similarly, it is useful to check the console to make sure that the backend behaves as we expect it to in different situations, like when we send data with an HTTP POST request. Any

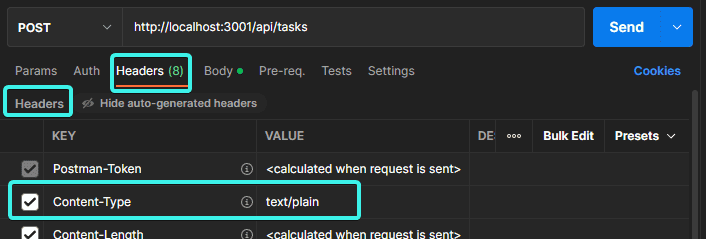

console.logcommands that you have put in temporarily for development will also appear here.A potential cause for issues is an incorrectly set

Content-Typeheader in requests. This can happen with Postman if the type of body is not defined correctly:The

Content-Typeheader is set totext/plain:The server appears to only receive an empty object:

The server will not be able to parse the data correctly without the correct value in the header. It won't even try to guess the format of the data since there's a massive amount of potential Content-Types.

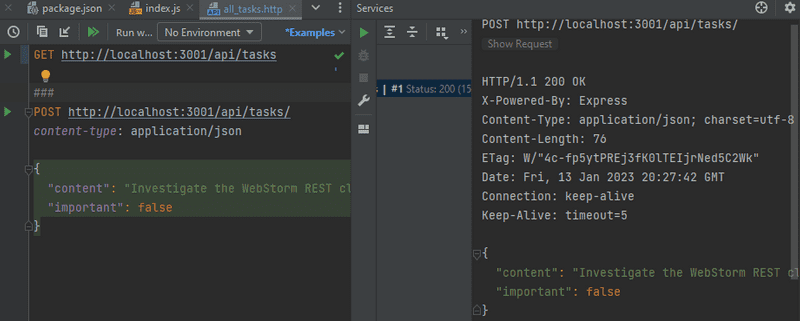

With WebStorm, the POST request can be sent as appended text in the REST client like this:

One benefit that the WebStorm REST client has over Postman is that the requests are handily available at the root of the project repository, and they can be distributed to everyone in the development team.

FYI: Postman does allow users to save requests, but the situation can get quite chaotic especially when you're working on multiple unrelated projects.

Notice in the picture above that we are also able to add the POST request in the same file using ### separators:

### GET request to example server

GET http://localhost:3001/api/tasks

### POST request to create a new task

POST http://localhost:3001/api/tasks

Content-Type: application/json

{

"content": "Learn more about working with APIs and Postman",

"important": true

}

You can use the play button next to the line numbers to run the request you'd like.

About debugging and using requests

Sometimes when you're debugging, you may want to find out what headers have been set in the HTTP request. One way of accomplishing this is through the get method of the request object, which can be used for getting the value of a single header. The request object also has the headers property, which contains all of the headers of a specific request.

Problems can occur with the WebStorm REST client if you accidentally add an empty line between the top row and the row specifying the HTTP headers. In this situation, the REST client interprets this to mean that all headers are left empty, which leads to the backend server not knowing that the data it has received is in the JSON format.

You will be able to spot this missing Content-Type header if you print the request headers via console.log('request.headers=', request.headers).

Once we know that the application receives data correctly, it's time to finalize the handling of the request:

    app.post("/api/tasks", (request, response) => {
      const maxId = tasks.length > 0
        ? Math.max(...tasks.map(t => Number(t.id)))
        : 0;

      const task = request.body;
      task.id = String(maxId + 1);

      tasks = tasks.concat(task);

      response.json(task);
    });

We need a unique id for the task.

First, we find out the largest id number in the current list and assign it to the maxId variable.

The id of the new task is then defined as maxId + 1 as a string.

This method is not recommended, but we will live with it for now as we will replace it soon enough.

The current version still has the problem that the HTTP POST request can be used to add objects with arbitrary properties.

Let's improve the application by defining that the content property may not be empty.

The important and date properties will be given default values.

All other properties are discarded:

    const generateId = () => {
      const maxId = tasks.length > 0
        ? Math.max(...tasks.map(t => Number(t.id)))
        : 0;
      return String(maxId + 1);
    };

    app.post("/api/tasks", (request, response) => {
      const body = request.body;

      if (!body.content) {
        return response.status(400).json({
          error: "content missing"
        });
      }

      const task = {
        id: generateId(),
        content: body.content,
        important: body.important || false,
        date: new Date().toISOString(),
      };

      tasks = tasks.concat(task);

      response.json(task);
    });

The logic for generating the new id number for tasks has been extracted into a separate generateId function.

If the received data is missing a value for the content property, the server will respond to the request with the status code 400 bad request:

    if (!body.content) {
      return response.status(400).json({
        error: "content missing"
      });
    }

Notice that calling return is crucial because otherwise the code will execute to the very end and the malformed task gets saved to the application.
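
The effect of the early return can be tried out without starting a server. The sketch below is only an illustration under stated assumptions: makeResponse is a hypothetical stand-in for Express's response object that merely records calls, and makeHandler is a hypothetical helper that builds the same handler with or without the return.

```javascript
// Minimal stand-in for Express's response object; it only records calls.
const makeResponse = () => {
  const calls = [];
  const response = {
    status: (code) => { calls.push(`status ${code}`); return response; },
    json: () => { calls.push("json"); return response; },
  };
  return { response, calls };
};

// Builds a handler with or without the early return (hypothetical helper).
const makeHandler = (useReturn, saved) => (request, response) => {
  if (!request.body.content) {
    response.status(400).json({ error: "content missing" });
    if (useReturn) return;
  }
  saved.push({ content: request.body.content }); // "save" the task
  response.json({ content: request.body.content });
};

const badRequest = { body: {} };

const savedWithReturn = [];
const first = makeResponse();
makeHandler(true, savedWithReturn)(badRequest, first.response);
console.log(savedWithReturn.length, first.calls); // 0 [ 'status 400', 'json' ]

const savedWithoutReturn = [];
const second = makeResponse();
makeHandler(false, savedWithoutReturn)(badRequest, second.response);
console.log(savedWithoutReturn.length, second.calls); // 1 [ 'status 400', 'json', 'json' ]
```

Without the return, the malformed task is stored and a second response is attempted. With a real Express response object, that second attempt would additionally fail with an error about headers already having been sent.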

If the content property has a value, the task will be based on the received data.

As mentioned previously, it is better to generate timestamps on the server than in the browser, since we can't trust that the host machine running the browser has its clock set correctly.

The generation of the date property is now done by the server.

If the important property is missing, we will default the value to false.

The default value is currently generated in a rather odd-looking way:

    important: body.important || false,

If the data saved in the body variable has a truthy value for the important property, the expression evaluates to that value as it is; the || operator does not convert it to a boolean.

If the property does not exist, then the expression will evaluate to false, which is defined on the right-hand side of the vertical lines.

To be exact, when the important property is false, the body.important || false expression will in fact return false from the right-hand side...
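
The behavior of the || operator can be checked directly in Node. The sketch below is only an illustration: withDefault and asBoolean are hypothetical helper names, and the Boolean(...) variant is one possible way to force a true/false value, not something the application currently does.

```javascript
// Hypothetical helper mirroring the expression used in the route handler.
const withDefault = (body) => body.important || false;

console.log(withDefault({ important: true }));  // true
console.log(withDefault({ important: false })); // false (taken from the right-hand side)
console.log(withDefault({}));                   // false (property missing)
console.log(withDefault({ important: "yes" })); // yes (a truthy string passes through unchanged)

// One possible way to always end up with a real boolean:
const asBoolean = (body) => Boolean(body.important);

console.log(asBoolean({ important: "yes" }));   // true
console.log(asBoolean({}));                     // false
```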

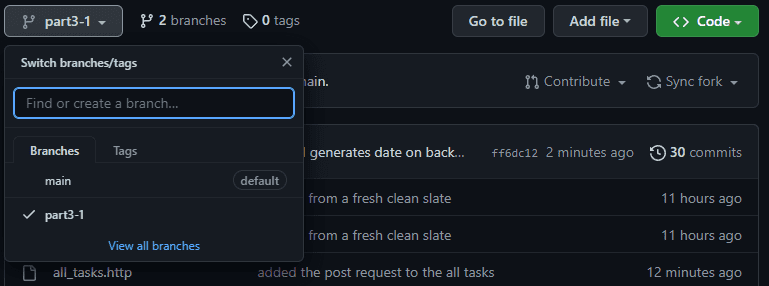

You can find the code for our current application in its entirety in the part3-1 branch of our backend repo.

If you clone the project, run the npm i command before starting the application with npm start or npm run dev.

Pertinent: In the function we have for generating IDs:

    const generateId = () => {
      const maxId = tasks.length > 0
        ? Math.max(...tasks.map(t => Number(t.id)))
        : 0;
      return String(maxId + 1);
    };

This part of the highlighted line may look intriguing:

    Math.max(...tasks.map(t => Number(t.id)))

What exactly is happening in that line of code? Let's take an example of, say, three tasks with ids 1, 2, and 3.

- tasks.map(t => Number(t.id)) creates a new array that contains all the IDs of the tasks, [1, 2, 3], in number form.
- Math.max returns the maximum value of the numbers that are passed to it.
- However, tasks.map(t => Number(t.id)) is an array, so it can't directly be given as a parameter to Math.max. The array can be transformed into individual numbers by using the "three dot" ... spread syntax.
- So 🚫 Math.max([1, 2, 3]) 🚫 becomes Math.max(1, 2, 3) 🥳.
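
The difference is easy to verify in Node. The snippet below is a small sketch using a made-up tasks array that only contains ids; it also shows why generateId checks tasks.length before calling Math.max.

```javascript
// Sample data for illustration only (real tasks have more properties).
const tasks = [{ id: "1" }, { id: "2" }, { id: "3" }];
const ids = tasks.map(t => Number(t.id));

console.log(ids);              // [ 1, 2, 3 ]
console.log(Math.max(ids));    // NaN: the array itself is not a number
console.log(Math.max(...ids)); // 3: same as Math.max(1, 2, 3)

// With no arguments Math.max returns -Infinity, which is why
// generateId falls back to 0 when the list is empty.
console.log(Math.max(...[]));  // -Infinity
```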

About HTTP request types

The HTTP standard talks about two properties related to request types, safety and idempotency.

The HTTP GET request should be safe:

*In particular, the convention has been established that the GET and HEAD methods SHOULD NOT have the significance of taking an action other than retrieval. These methods ought to be considered "safe".*

Safety means that the executing request must not cause any side effects on the server. By side effects, we mean that the state of the database must not change as a result of the request, and the response must only return data that already exists on the server.

Nothing can ever guarantee that a GET request is safe; this is just a recommendation that is defined in the HTTP standard.

By adhering to RESTful principles in our API, GET requests are always used in a way that they are safe.

The HTTP standard also defines the request type HEAD, which ought to be safe.

In practice, HEAD should work exactly like GET but it does not return anything but the status code and response headers.

The response body will not be returned when you make a HEAD request.

All HTTP requests except POST should be idempotent:

*Methods can also have the property of "idempotence" in that (aside from error or expiration issues) the side-effects of N > 0 identical requests is the same as for a single request. The methods GET, HEAD, PUT and DELETE share this property.*

This means that if a request does generate side effects, then the result should be the same regardless of how many times the request is sent.

If we make an HTTP PUT request to the URL /api/tasks/10 and with the request we send the data { content: "no side effects!", important: true }, the result is the same regardless of how many times the request is sent.

Like safety for the GET request, idempotence is also just a recommendation in the HTTP standard and not something that can be guaranteed simply based on the request type.

However, when our API adheres to RESTful principles, then GET, HEAD, PUT, and DELETE requests are used in such a way that they are idempotent.

Of the request types discussed here, POST is the only one that is neither safe nor idempotent.

If we send 5 separate HTTP POST requests to /api/tasks with a body of {content: "many same", important: true}, the resulting 5 tasks on the server will all have the same content.
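
The contrast between PUT and POST can be sketched with plain functions instead of a running server. Everything here is an assumption made for illustration: tasks is an in-memory stand-in for the server state, and putTask and postTask are hypothetical helpers that mimic what RESTful PUT and POST handlers would do.

```javascript
// In-memory stand-in for the server state (illustration only).
let tasks = [{ id: "10", content: "old", important: false }];

// PUT replaces the task that has the given id.
const putTask = (id, data) => {
  tasks = tasks.map(t => (t.id === id ? { ...data, id } : t));
};

// POST always creates a new task with a fresh id.
const postTask = (data) => {
  const maxId = tasks.length > 0 ? Math.max(...tasks.map(t => Number(t.id))) : 0;
  tasks = tasks.concat({ ...data, id: String(maxId + 1) });
};

putTask("10", { content: "no side effects!", important: true });
const afterOnePut = JSON.stringify(tasks);
putTask("10", { content: "no side effects!", important: true });
console.log(JSON.stringify(tasks) === afterOnePut); // true: repeating PUT changes nothing

postTask({ content: "many same", important: true });
postTask({ content: "many same", important: true });
console.log(tasks.length); // 3: every POST added another task
```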

Middleware

The Express JSON parser we used earlier is called middleware.

Middleware are functions that can be used for handling request and response objects.

As a reminder, the JSON parser we used earlier:

- takes the raw data from the requests that are stored in the request object
- parses it into a JavaScript object
- assigns it to the request object as a new property body.

In practice, you can use several middlewares at the same time. When you have more than one, they're executed one by one in the order that they were listed in the application code.

Let's implement our own middleware function that prints information about every request that is sent to the server.

A middleware function receives three parameters:

    const requestLogger = (request, response, next) => {
      console.log("Method:", request.method);
      console.log("Path:  ", request.path);
      console.log("Body:  ", request.body);
      console.log("---");
      next();
    };

At the end of the function body, the next function that was passed as a parameter is called.

The next function yields control to the next middleware.

Middleware is used like this:

    app.use(requestLogger);

Remember, middleware functions are called in the order that they're encountered by the JavaScript engine. Notice that json-parser is listed before the requestLogger, because otherwise request.body will not be initialized when the logger is executed!
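
Why the order matters can be shown with a toy model of a middleware chain. This is a simplified sketch, not how Express is implemented internally: runChain is a hypothetical dispatcher, jsonParser is a stand-in for the real JSON parser, and rawBody is an invented property holding the unparsed request data.

```javascript
// Toy dispatcher (NOT Express internals): runs middleware in listed order,
// moving on only when the current function calls next().
const runChain = (middlewares, request, response) => {
  let index = 0;
  const next = () => {
    const middleware = middlewares[index++];
    if (middleware) middleware(request, response, next);
  };
  next();
};

const log = [];

// Stand-in for the JSON parser: fills in request.body.
const jsonParser = (request, response, next) => {
  request.body = JSON.parse(request.rawBody);
  next();
};

// Same idea as requestLogger, but recording into an array.
const requestLogger = (request, response, next) => {
  log.push(`Body: ${JSON.stringify(request.body)}`);
  next();
};

runChain([jsonParser, requestLogger], { rawBody: '{"content":"hi"}' }, {});
runChain([requestLogger, jsonParser], { rawBody: '{"content":"hi"}' }, {});

console.log(log[0]); // Body: {"content":"hi"}
console.log(log[1]); // Body: undefined
```

When the logger runs first, the body has not been parsed yet, so it has nothing to print.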

Middleware functions have to be used before routes when we want them to be executed before the route event handlers are called. Sometimes, we want to use middleware functions after routes. We do this when the middleware functions should only be called if no route handler processes the HTTP request.

Let's add the following middleware after our routes. This middleware will be used for catching requests made to non-existent routes. For these requests, the middleware will return an error message in the JSON format.

    const unknownEndpoint = (request, response) => {
      // This middleware sends the response itself, so it does not call next()
      response.status(404).send({ error: "unknown endpoint" });
    };

    app.use(unknownEndpoint);

You can find the code for our current application in its entirety in the part3-2 branch of our backend repo.